Highlight reel:

Timeline

H2 2024

Co-creators

I worked with my product partner to outline the strategy and root our hypotheses in research. I worked across growth, marketing, legal, and GTM to balance stakeholder considerations. I also collaborated with our engineering partners to assess technical limitations and determine a strategy for scaling up.

Methods

User research, usability testing, Hotjar analysis, difference-in-differences (DiD) testing

Problem

Renters leave Apartment List to find the information they need to make a decision because our site doesn’t provide it.

$100M

of missed revenue from unattributed leases.

Increased traffic by 40%

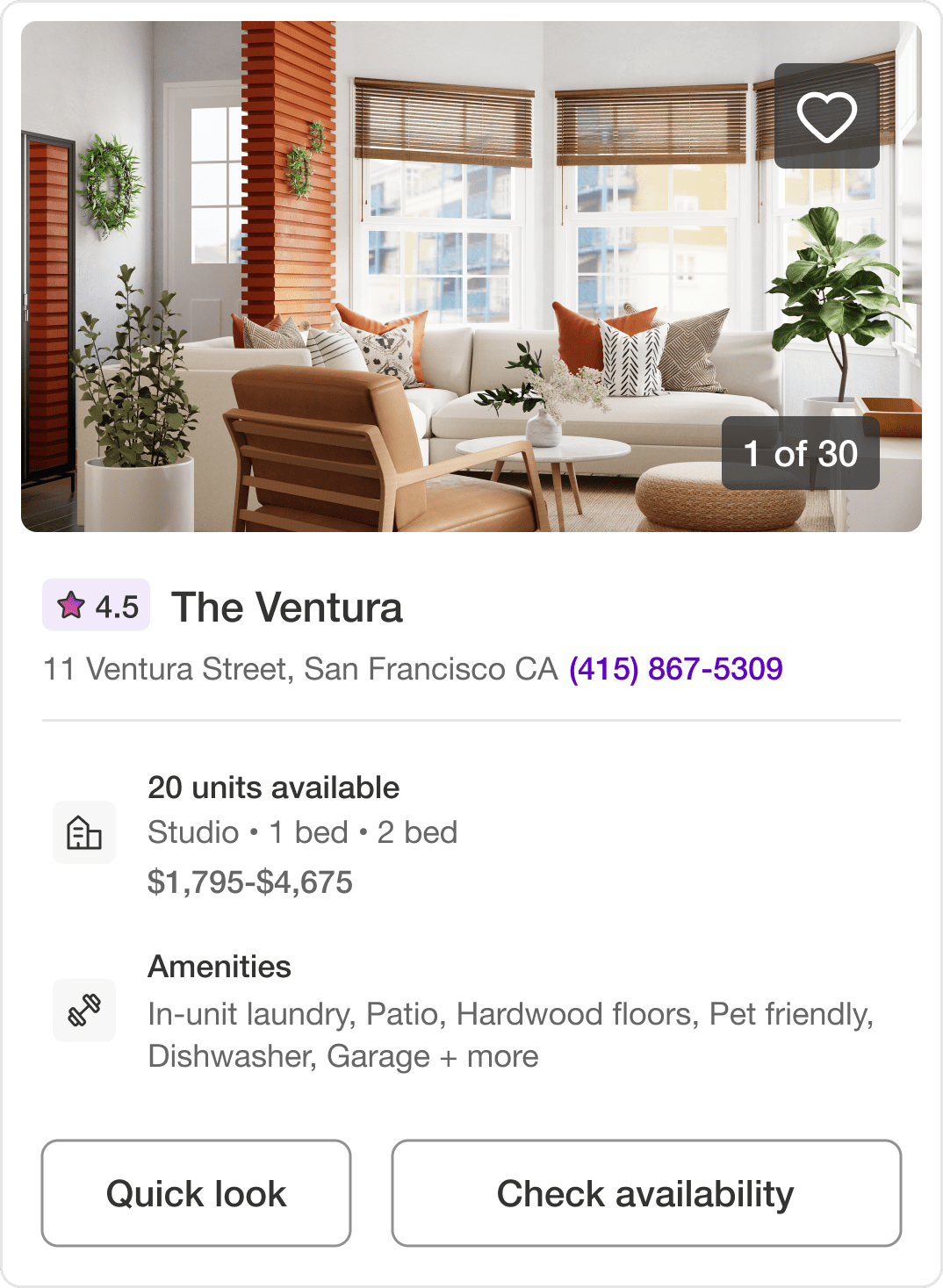

Added rating and review snippets to listing cards on search result pages.

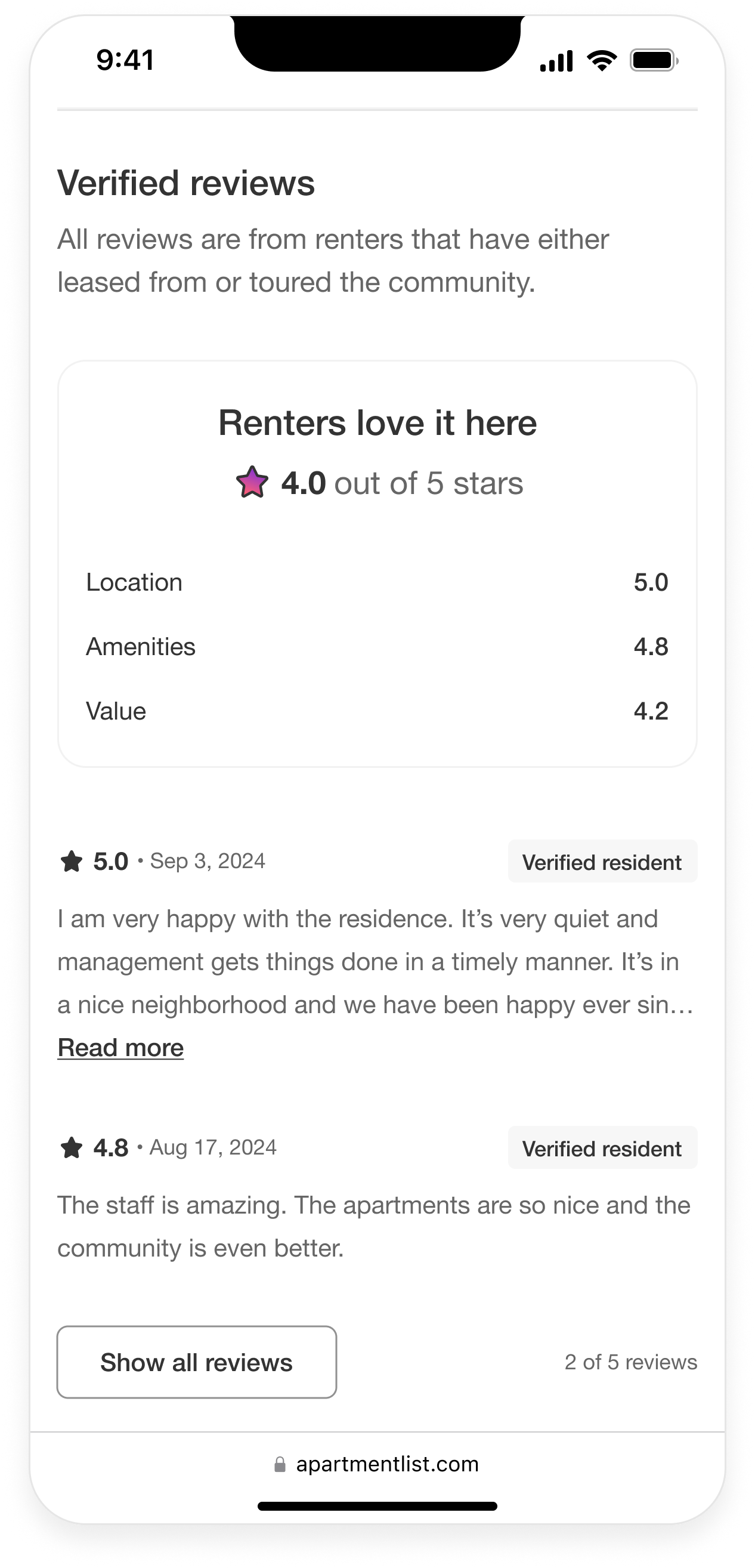

Increased conversions by 10%

Added verified reviews with category highlights to listing pages.